When I first shared this talk at one of Harver’s Weekly Design Talks for our Product and Engineering teams, I wanted to make sense of what it means to design with AI. What started as curiosity about how Large Language Models (LLMs) work eventually became a reflection on how we think with them.

I’ve removed some internal information and made a few adjustments to improve the flow. Overall, though, it still reflects the original structure and intent of my talk.

Apple recently released a study titled "The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity".

This study reveals that “reasoning-enabled” Large Reasoning Models (LRMs) offer only an illusion of deeper thinking. Once tasks become more complex, these models start making more mistakes.

Apple’s research seems to push the industry conversation from “How big can models get?” toward “How deeply can they really reason?”, a necessary shift as we learn to work with these systems and use them more thoughtfully.

Another study that caught my attention while I was researching the effects of AI use came from CHI 2024, the Conference on Human Factors in Computing Systems: “‘AI enhances our performance, I have no doubt this one will do the same’: The Placebo Effect Is Robust to Negative Descriptions of AI.” It explored what the researchers called the placebo effect in AI.

The observation was that participants consistently believed the AI made them faster and more accurate, even when they were told it might harm their performance. The key finding: belief in AI’s capability improved outcomes more than the system’s actual performance did. In other words, belief in AI assistance can produce measurable performance gains on its own, which highlights the profound influence of expectations on human-AI interaction.

My exploration of AI wasn’t planned. It started when my husband, Ademar, began his Master’s in Artificial Intelligence at the University of Waikato.

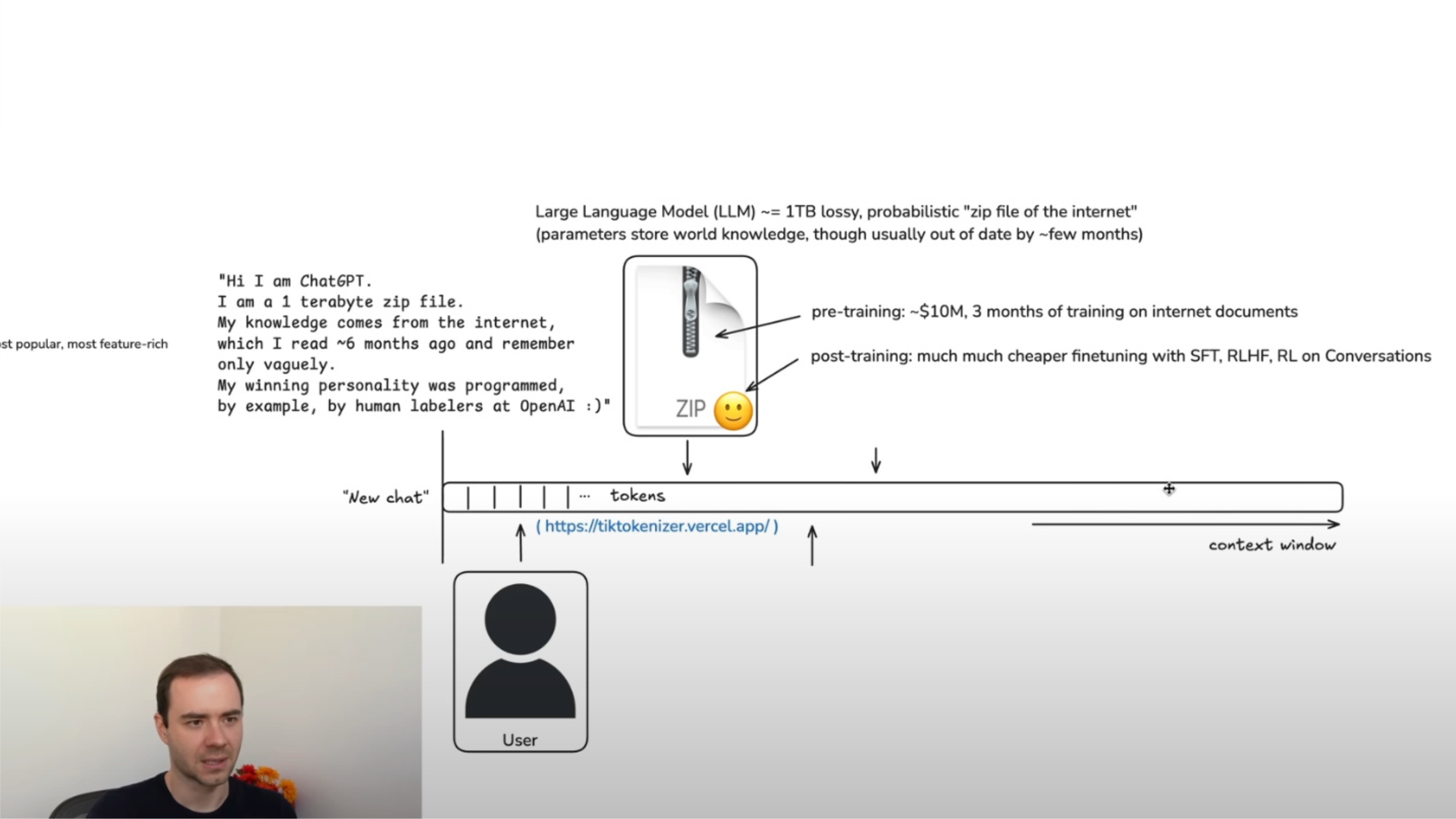

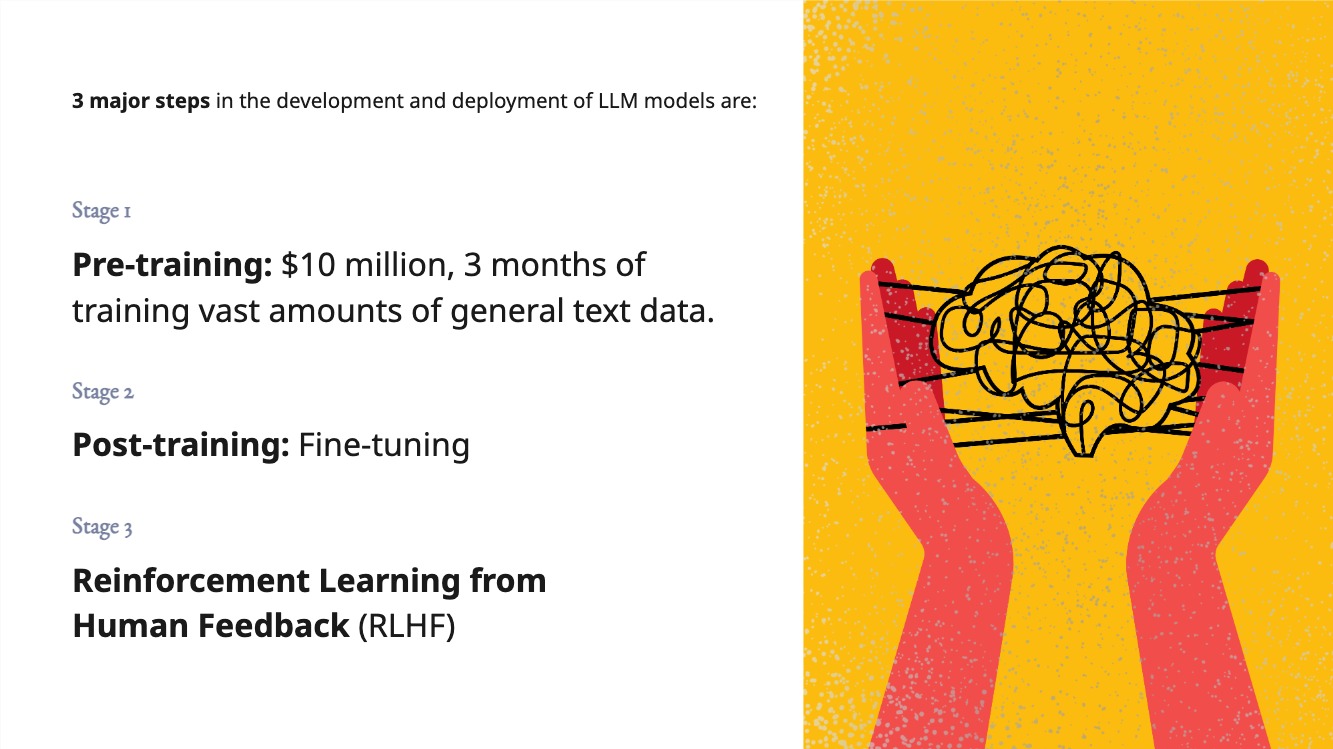

I helped him rebuild his academic website, and I found myself immersed in his reading lists and, slowly, in this world of AI. He walked me through the basics of machine learning and how LLMs work, and even pointed me to lectures by Andrej Karpathy, whose breakdown of how language models process context changed the way I viewed my daily interactions with tools like ChatGPT or Copilot.


So the real question is... Is AI controlling our thinking? Or are we learning to think through it?

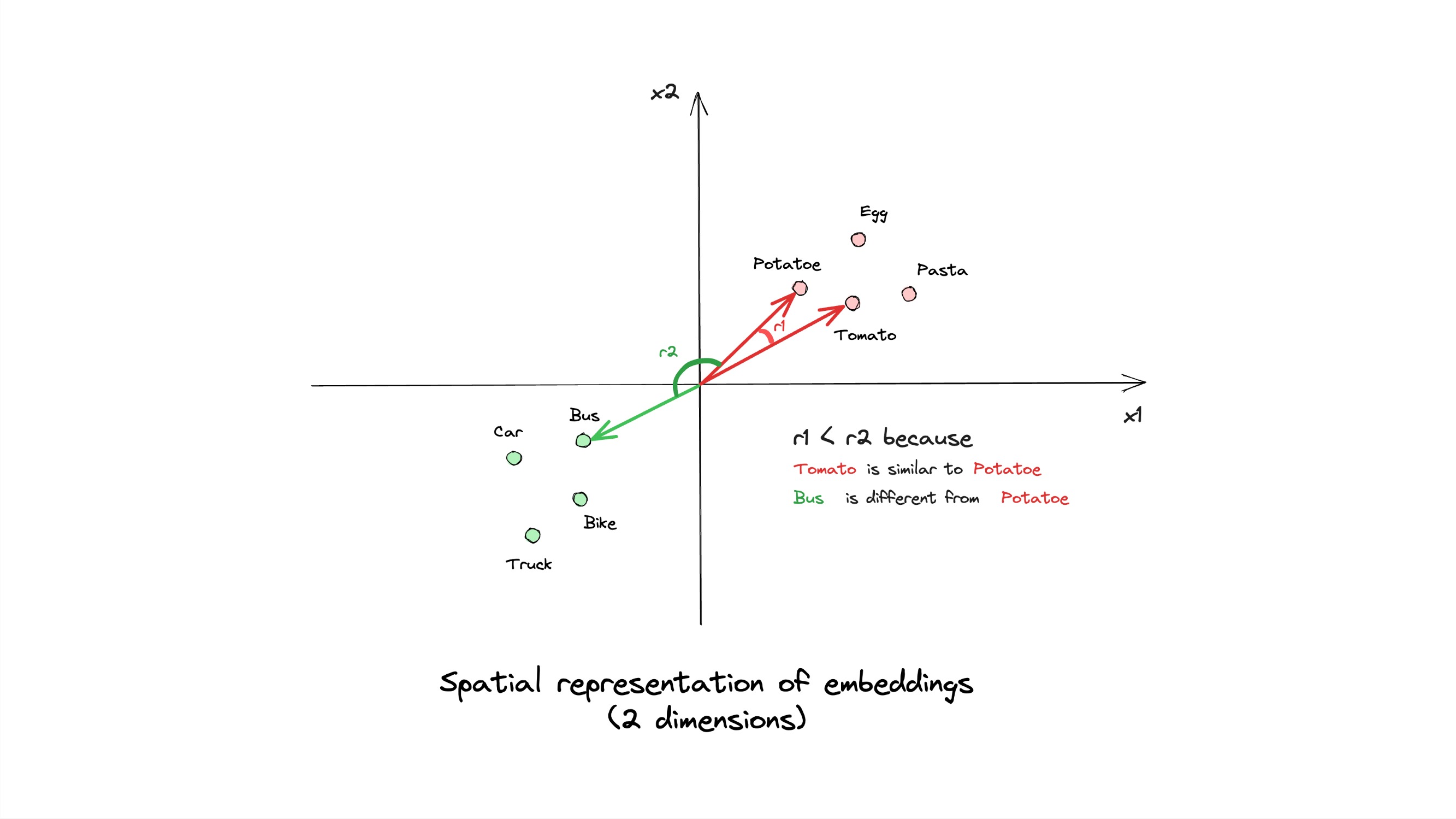

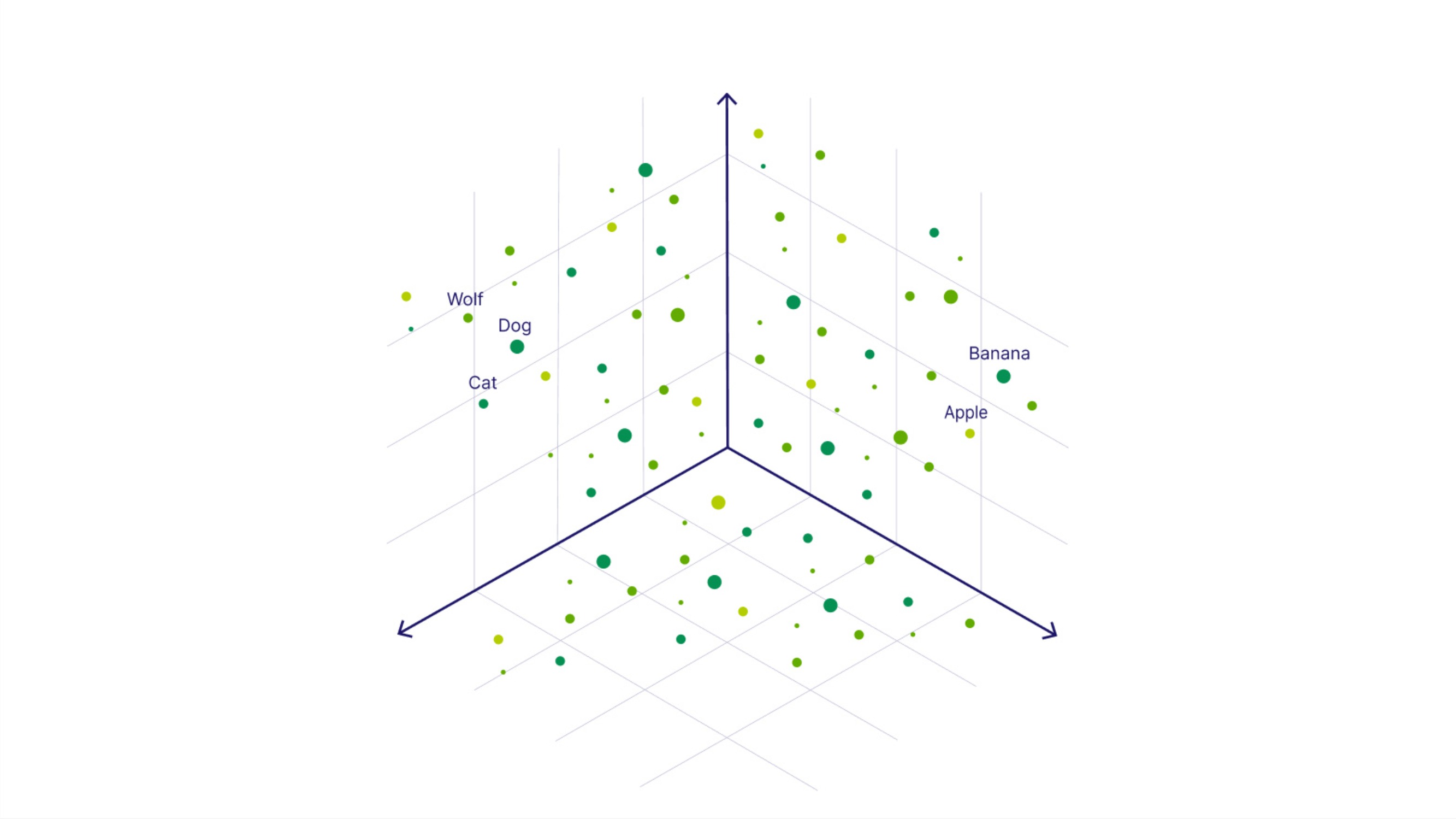

Knowing that LLMs are, at their core, mathematical systems that represent language as numerical values reframes this question: the power may lie not in the model but in how we use it.

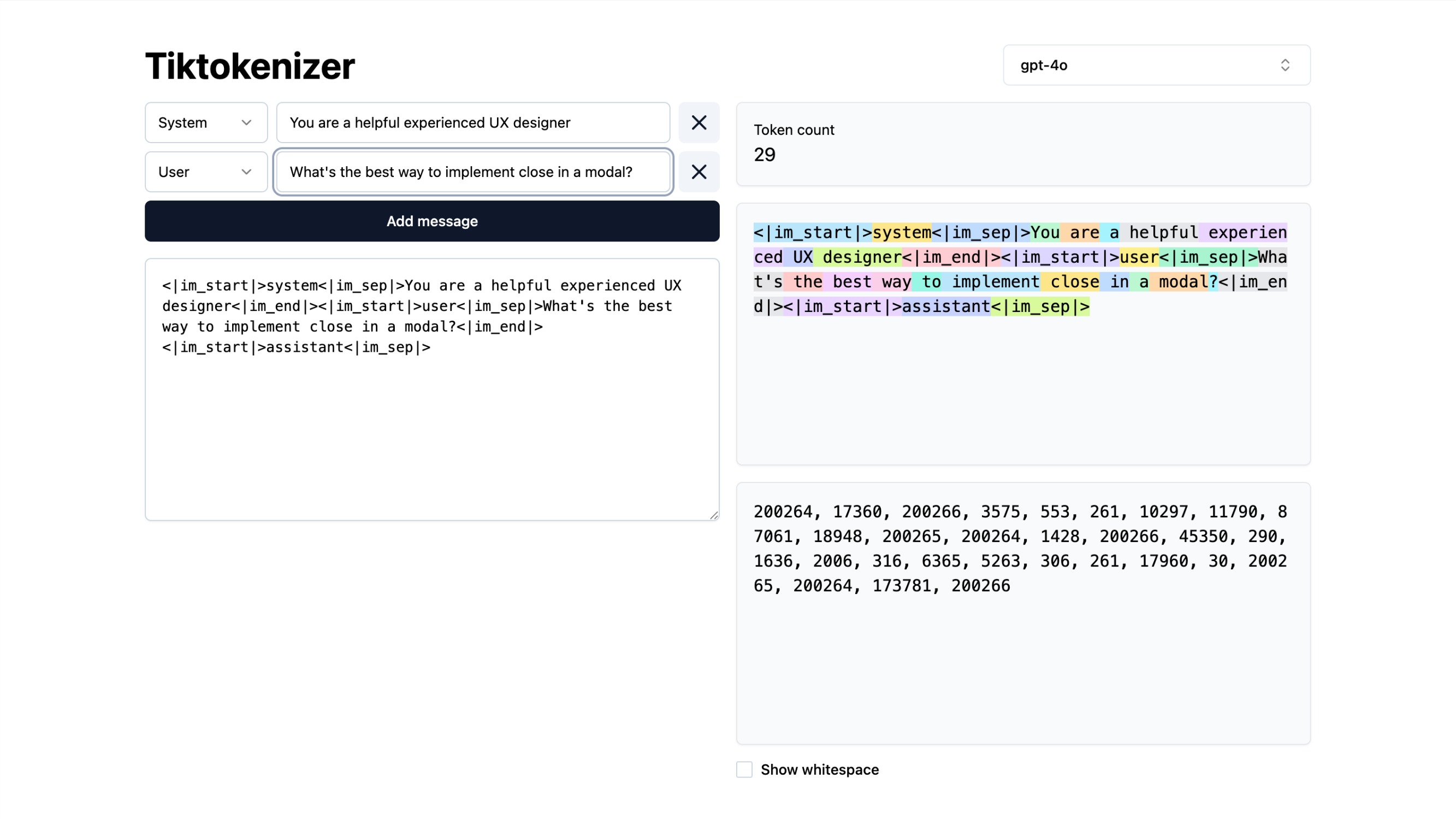

Once I understood that interaction happens within a bounded context window, I began asking: How can I design prompts that lead to more meaningful outcomes? Could I prompt it in a way that gets me the information closest to what I am looking for?

That question led me to explore prompt engineering... the no-code, no-math way of finding the nearest “vector.” It is a way of designing how we communicate with AI systems without doing the mathematical calculation ourselves. What’s nice is that many resources on prompting techniques are already shared online, and it’s not just influencer advice: there’s solid research behind what makes prompts effective.
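For readers curious about what the “vector” idea looks like in numbers, here is a minimal sketch in Python. It is only a loose illustration of the metaphor, not how an LLM actually generates text: the three-number vectors, the labels, and the `nearest` helper are all invented for this example, and real models learn vectors with far more dimensions.

```python
import math

# Toy, hand-made 3-number "embeddings". These values are invented for
# illustration; real models learn much larger vectors from data.
TOY_VECTORS = {
    "interview transcript": [0.9, 0.1, 0.0],
    "usability finding": [0.8, 0.3, 0.1],
    "pasta recipe": [0.0, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Return how closely two vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query_vector, vectors=TOY_VECTORS):
    """Return the label whose vector is most similar to the query (hypothetical helper)."""
    return max(vectors, key=lambda name: cosine_similarity(query_vector, vectors[name]))


# A vague prompt sits in the middle of everything; a specific prompt
# points clearly toward one region of the space.
vague_prompt = [0.4, 0.4, 0.4]
specific_prompt = [0.9, 0.05, 0.0]

print(nearest(specific_prompt))
print(cosine_similarity(vague_prompt, TOY_VECTORS[nearest(vague_prompt)]))
```

In this toy setup, the specific prompt scores much closer to its best match than the vague one does. That is the intuition behind writing prompts with clear context and intent: the wording nudges the numbers toward the region you actually care about.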

Prompt engineering is interaction design for intelligent systems. You’re designing not pixels but reasoning patterns, tone, and collaboration between human and machine.

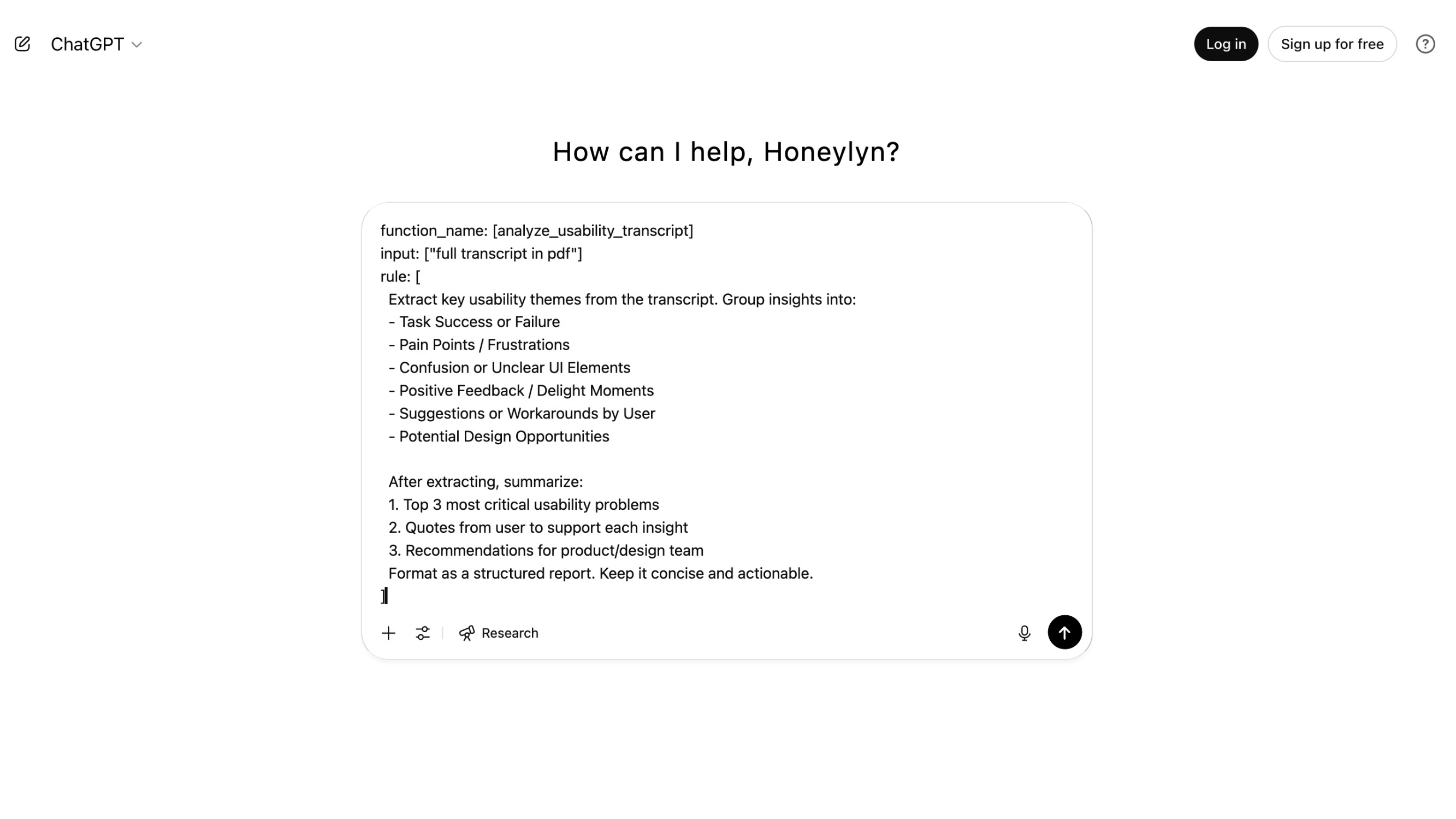

Depending on what your company officially uses, you can analyze your transcripts with tools like Copilot, ChatGPT, or Claude. Whichever tool you use, prompt engineering remains essential.


Is AI really asking us to take a side? After researching this topic, I realized that it isn’t. It is asking us to "design" better questions. Understanding that it’s math (not mind) lets us use it more intentionally.

AI may not truly think, but it helps us reflect, and that is where real design happens in this new age of AI: through mindful prompt formatting techniques.

Knowing now that every word and phrase carries weight and value reminds us that this isn’t about machines overtaking our collective consciousness... it is about learning how to think with it.