This topic was part of Harver’s Weekly Design Talks that I prepared for our Product and Engineering teams. It was inspired by Princeton University’s research titled “Help Me Help the AI: Understanding How Explainability Can Support Human–AI Interaction.” The paper caught my attention because it reframed explainability not as a technical challenge but as a question of how real users understand and collaborate with AI. I’ve adapted the content for this article while preserving the central themes and added examples from the fintech domain to maintain confidentiality.

The paper was presented at CHI 2023. The Princeton University researchers looked into how users of an AI-powered bird identification app think about and use explanations.

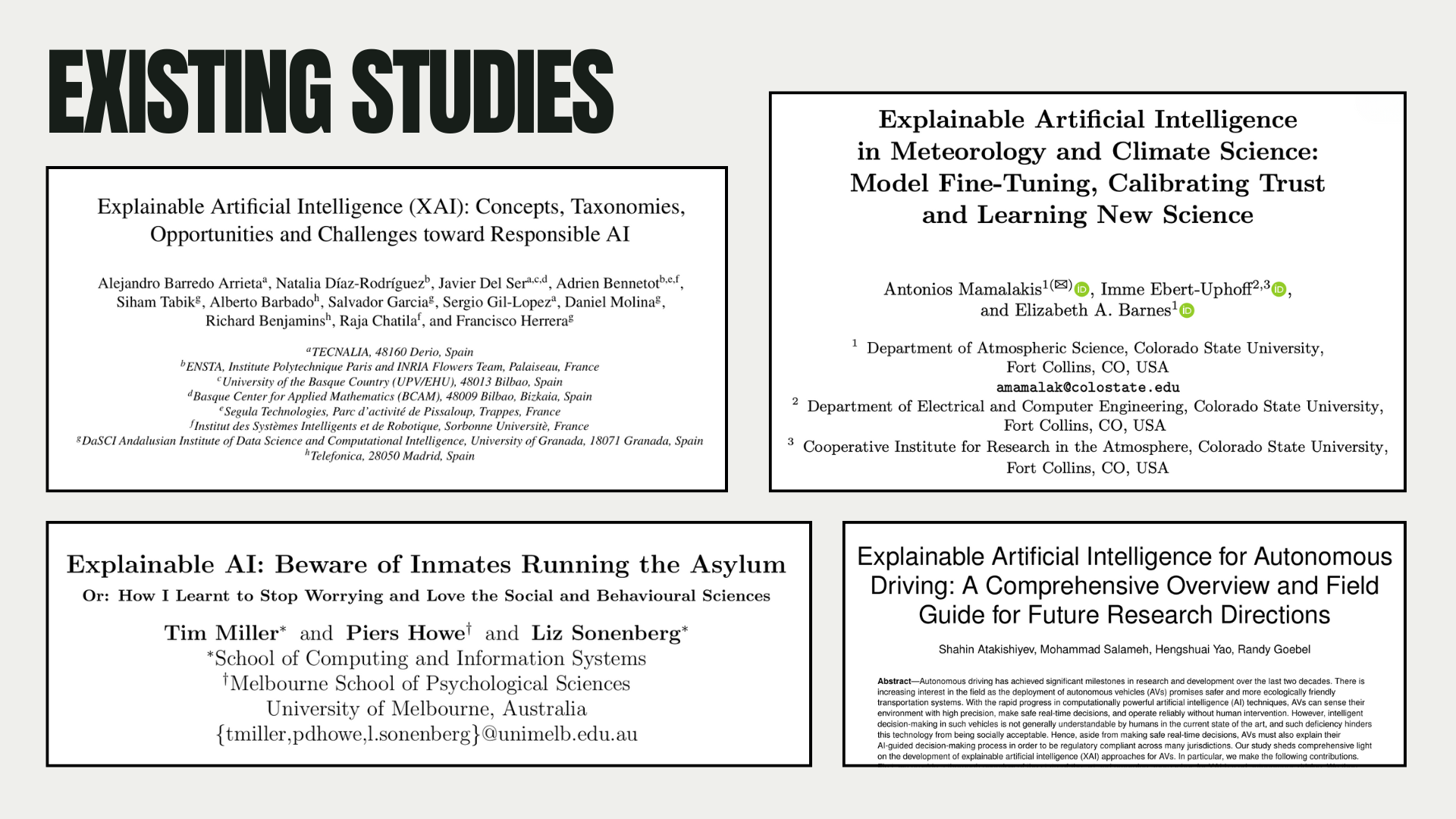

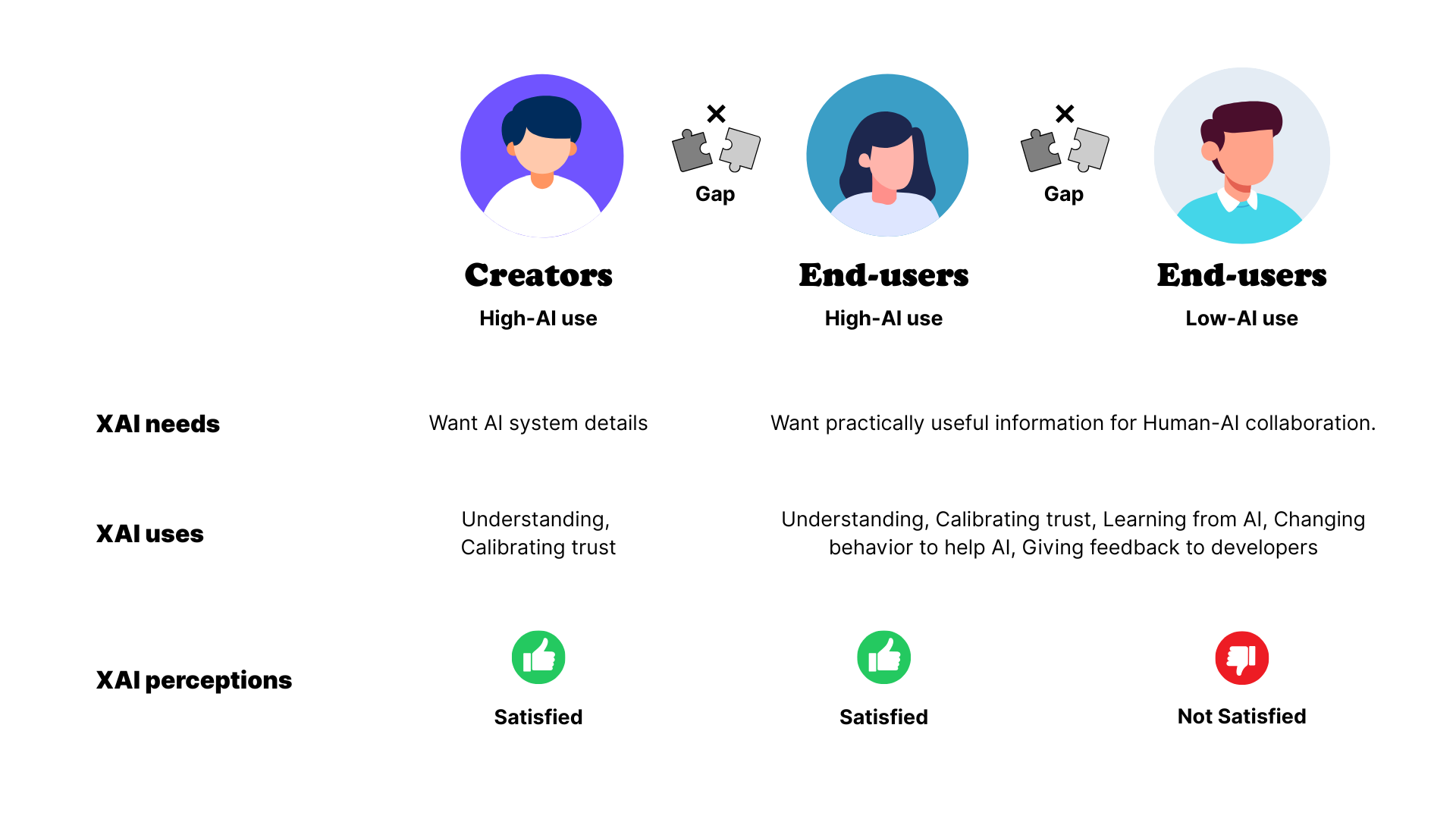

Over the past decade, there has been a lot of research on Explainable AI (XAI), from visualization techniques to interpretability frameworks. The field is not without critique, though. Some argue that much of this work focuses on supporting researchers and model developers (for debugging, auditing, or validating models) rather than serving the people who rely on AI outputs.

This critique raises an essential question: Are we designing explanations to help humans understand AI or merely to help AI justify itself?

For designers, this is an opportunity to contribute to a more ethical, human-centered approach to explainability, one that prioritizes usability and trust.

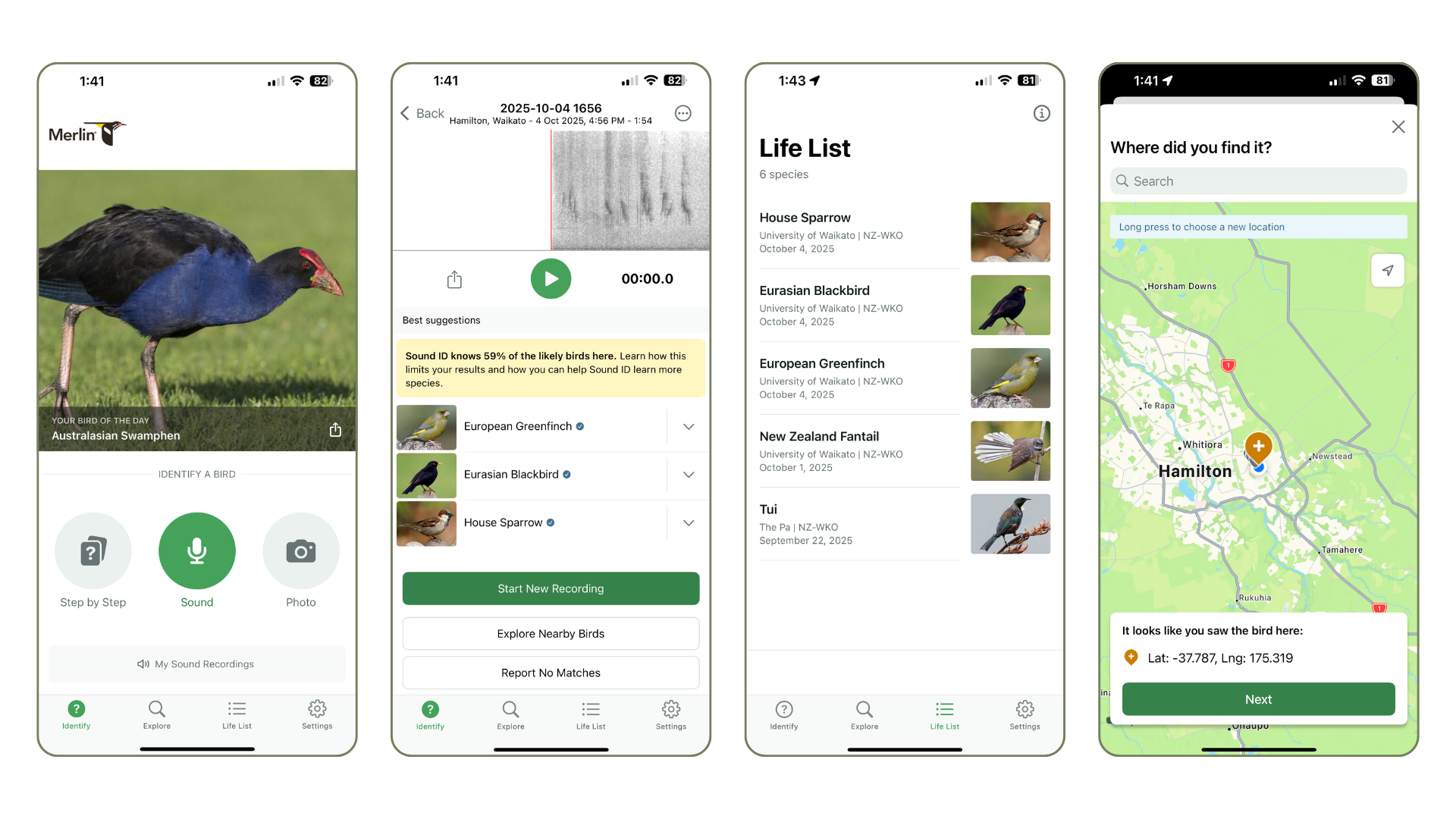

To understand how explanations affect trust and usability, the researchers studied real end-users of Merlin Bird ID, a bird identification app created by Cornell University. They wanted to learn which kinds of explanations actually improve trust, usability, and collaboration between humans and AI.

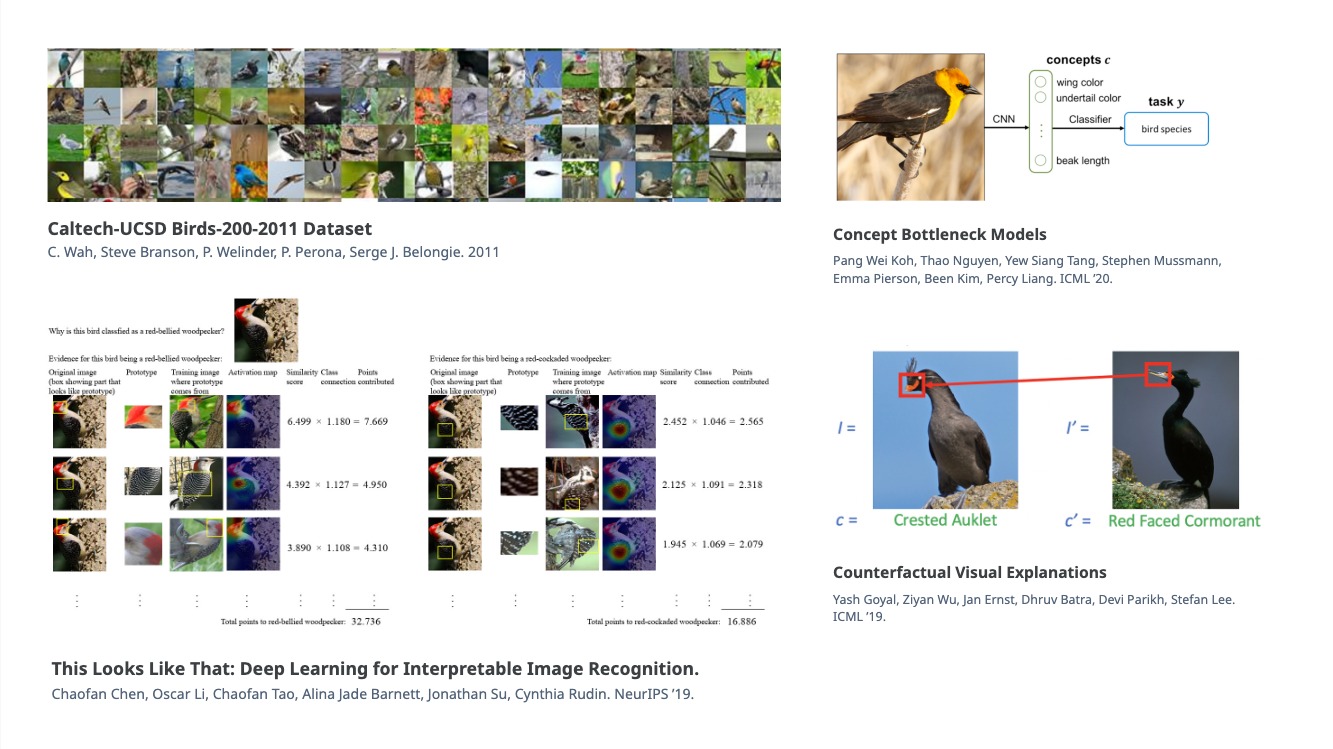

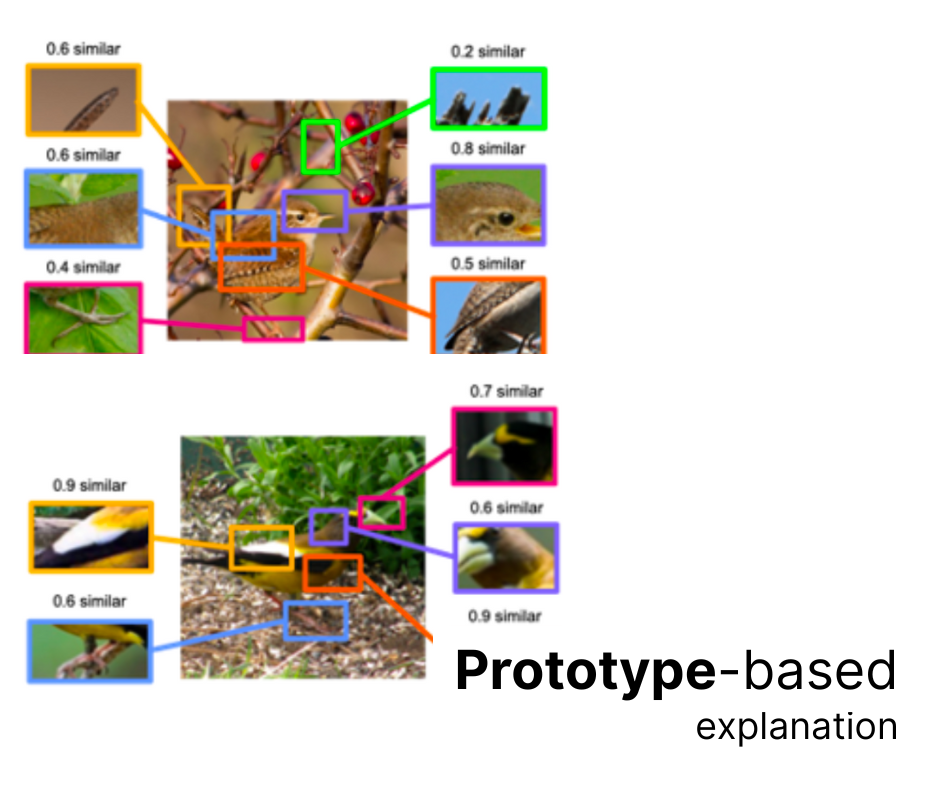

The researchers chose bird identification as the study domain because it already has deep technical groundwork in XAI research, particularly in computer vision and model interpretability.

Bird image classification using the Caltech–UCSD Birds (CUB) dataset is among the most common benchmarks in explainability research. Moreover, birdwatching is a low-stakes, widely practiced activity that attracts users with diverse backgrounds, from casual nature enthusiasts to professionals, with varying levels of AI literacy.

This diversity enabled the researchers to examine how explainability needs differ across users, which aligns with the goals of human-centered XAI and made the domain a natural choice.

The researchers chose Merlin specifically because it is a real-world, AI-powered bird identification app that combines technical depth (computer vision and audio models) with human diversity (users of varying expertise).

Users upload a photo or sound and the app returns likely bird matches with example images, sounds and contextual cues such as location or season, making it an ideal setting to study how people interpret and interact with AI explanations.

It is available on both iOS and Android.

■ Download iOS here

■ Download Android here

The study found that participants didn’t just want to understand the AI; they wanted to collaborate with it.

They used explanations to:

■ calibrate trust (know when to believe the AI)

■ learn to identify birds better themselves

■ supply better inputs to help the AI improve

■ give feedback to developers

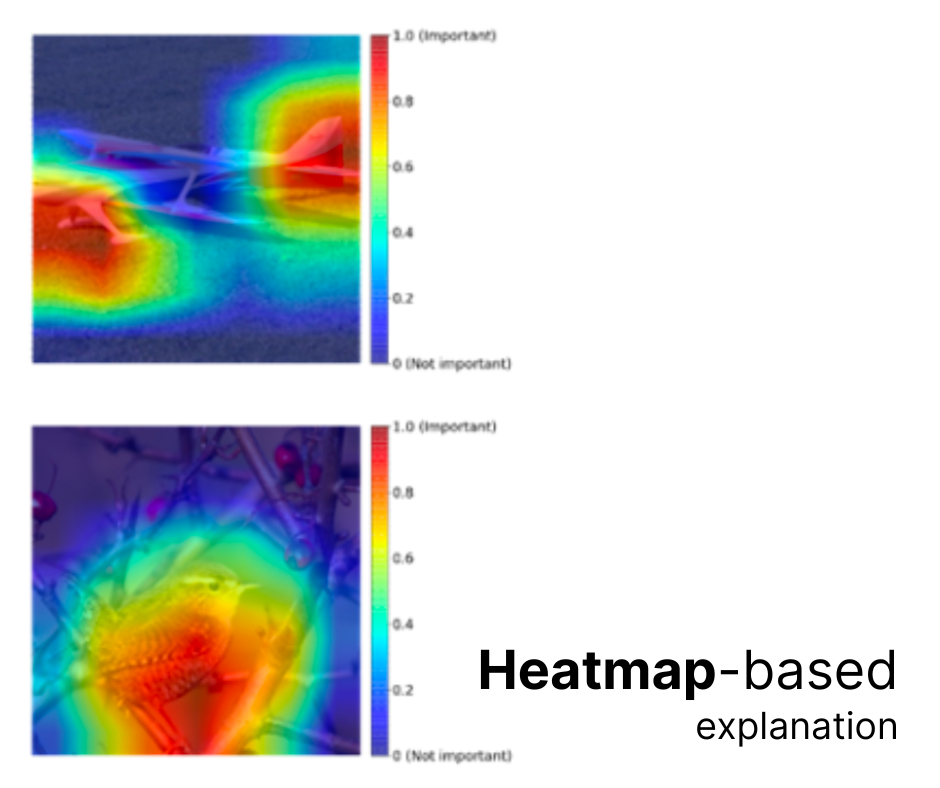

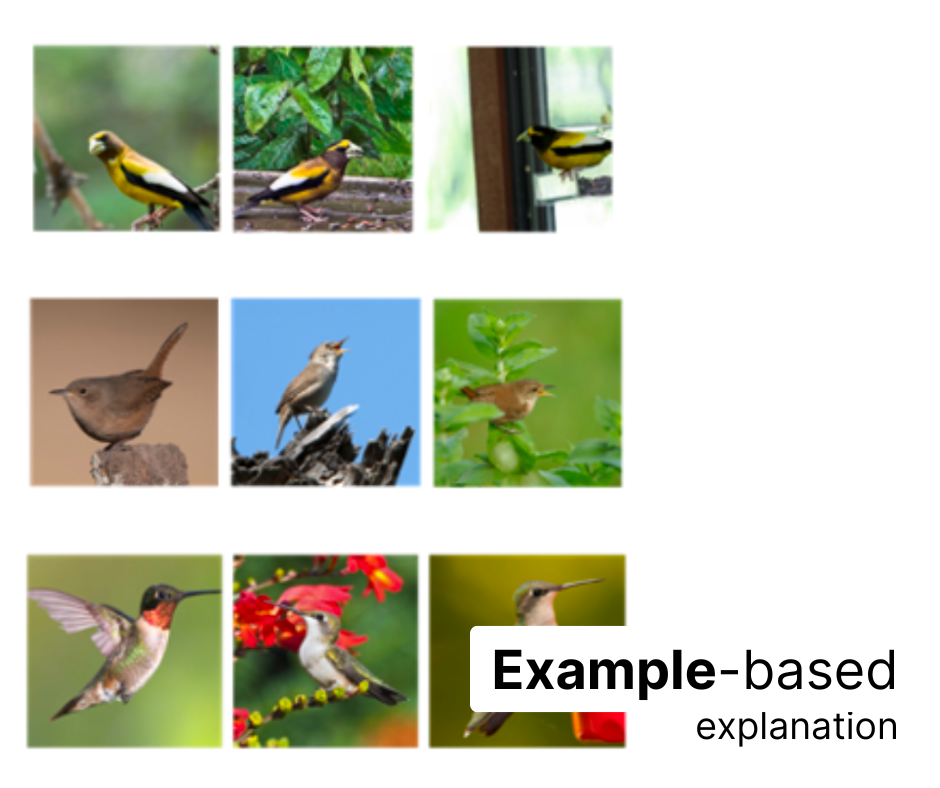

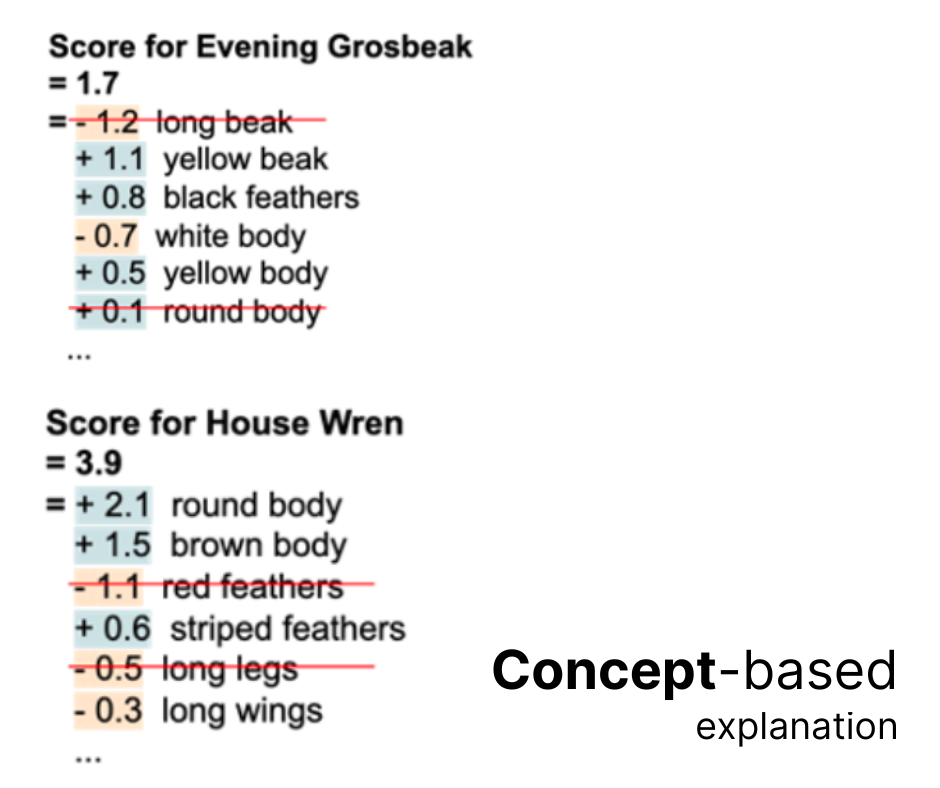

Participants preferred part-based explanations (concepts and prototypes) that mirror human reasoning over abstract visualizations like heatmaps.

In the context of loan approvals, when a bank presents a loan decision, it can provide clear, actionable, and contextual explanations like this:

“Your loan application was declined because your debt-to-income ratio is above 35%. Paying down $1,200 of existing debt or increasing your monthly income by $400 could strengthen your eligibility.”

Showing actionable insights on how to increase the chances of loan approval improves human-AI interaction.

In a banking context, people don’t just want to understand AI decisions; they want to actively participate in improving the outcome, in this case their chances of approval.

When AI acts as a financial collaborator for your investment funds, explanations empower the user to learn, adjust, and invest smarter together with the system (or in this case the bank). For example:

“We’ve suggested a conservative portfolio because your recent adjustments show low risk tolerance and 40% of your funds are in stable assets. Increasing your risk tolerance from 3 to 5 would open access to higher-yield options similar to your past successful investments.”

A “What if I…” mode lets users simulate changes in their risk level, investment duration, or diversification. This mirrors Merlin users modifying their photo inputs to help the AI improve recognition.

Consider an AI that automatically categorizes business expenses from uploaded receipts or bank feeds. While it saves time, users often correct misclassified items without understanding why the AI got it wrong, so they cannot prevent the same mistake next time. An explanation can close that gap:

“This transaction was categorized as Office Supplies because 80% of similar purchases from ‘OfficeMax’ and ‘Paper Plus’ were labeled this way.”

If the user changes it to Marketing Expense, the interface follows up with:

“Thanks! We’ll remember this pattern for future transactions from similar vendors. You can also tag this expense to train your ‘Marketing’ category rules.”

Like Merlin users who adjusted their inputs to help the AI identify birds more accurately, users of the app can teach the system through meaningful feedback, transforming expense automation into a collaborative, trust-building workflow.

AI can also serve as a forecasting tool that predicts future cash flow from invoice trends, payment patterns, and spending behavior. While these predictions are useful, many users see only a number or chart without understanding why that outcome is projected or how they can influence it. An explanation can fill that gap:
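For the engineers reading along, here is a minimal sketch of how such an explanation and feedback loop might be wired up. It is not how any real product works: the function names, the `(vendor, category)` history format, and the simple majority-vote rule are all assumptions made for illustration.

```python
from collections import Counter


def explain_category(vendor, history):
    """Suggest the most common past label for a vendor and explain why.

    `history` is a list of (vendor, category) pairs. This data shape and
    the majority-vote rule are hypothetical, chosen to keep the sketch small.
    """
    labels = Counter(cat for v, cat in history if v == vendor)
    if not labels:
        return None, f"No past purchases from '{vendor}' to learn from."
    category, count = labels.most_common(1)[0]
    share = round(100 * count / sum(labels.values()))
    reason = (
        f"This transaction was categorized as {category} because "
        f"{share}% of past purchases from '{vendor}' were labeled this way."
    )
    return category, reason


def record_correction(vendor, corrected_category, history):
    """Store a user's correction so it counts toward future suggestions."""
    history.append((vendor, corrected_category))


# Hypothetical history: four of five OfficeMax purchases were Office Supplies.
history = [("OfficeMax", "Office Supplies")] * 4 + [("OfficeMax", "Marketing Expense")]
category, reason = explain_category("OfficeMax", history)
print(reason)
```

Each call to `record_correction` shifts the vendor's label counts, so repeated corrections eventually change the suggestion and the percentage shown in the explanation.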

“Your forecast dips in Week 3 because two large invoices from Client A are typically paid 20 days late.”

Users can explore “what-if” adjustments like “What if I remind Client A earlier?” or “What if I switch billing cycles to weekly?” As they adjust these variables, the forecast updates dynamically, turning explainability into interactive learning.

Online banks enable users to send money internationally with transparent fees and real-time exchange rates. Yet while users see a “You’ll receive X amount” summary, many don’t understand why rates fluctuate or how total costs are determined. This lack of clarity limits user trust and perceived control, even in a transparent system. A fuller explanation could read:
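A what-if interaction like this could be backed by something as simple as re-running the forecast with one input changed. The sketch below assumes a deliberately naive model in which each invoice is paid on its due day plus the client's typical delay. The `Invoice` type, the amounts, and the delay values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    client: str
    amount: float
    due_day: int  # day the invoice is due, counted from day 1 of the forecast


def weekly_inflow(invoices, typical_delay, weeks=6):
    """Sum expected payments per week.

    Each invoice is shifted by its client's typical delay in days
    (0 if the client is not listed in `typical_delay`).
    """
    totals = [0.0] * weeks
    for inv in invoices:
        paid_day = inv.due_day + typical_delay.get(inv.client, 0)
        week = (paid_day - 1) // 7  # days 1-7 fall in week index 0
        if week < weeks:
            totals[week] += inv.amount
    return totals


# Hypothetical data: Client B pays weekly on time; Client A has two large
# invoices due in Week 3.
invoices = [Invoice("Client B", 2000.0, d) for d in (3, 10, 17, 24, 31, 38)]
invoices += [Invoice("Client A", 5000.0, 16), Invoice("Client A", 5000.0, 18)]

baseline = weekly_inflow(invoices, {"Client A": 20})  # usually 20 days late
what_if = weekly_inflow(invoices, {"Client A": 3})    # "What if I remind Client A earlier?"

print("Week 3 baseline:", baseline[2])
print("Week 3 what-if: ", what_if[2])
```

Because the explanation and the simulation share the same inputs, the interface can point at the exact variable (Client A's delay) that drives the difference.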

“Here’s how we calculated your total:

- Exchange rate: Based on the mid-market rate at 1:45 PM GMT (+0.22% fluctuation from last hour).

- Fee breakdown: 0.45% bank service fee + fixed $1 transfer cost.

- Speed factor: Estimated 3 hours. Destination bank processes transfers in daily batches.”

Just as Merlin users learned how to help the AI identify birds more accurately, online bank users can learn to help the system by choosing better timing, understanding volatility, and ultimately co-navigating the complex world of currency exchange with AI.
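The fee breakdown above is simple arithmetic, and exposing it as structured data makes it easy for the interface to show each line. Here is a rough sketch, assuming the fees are deducted from the amount sent before conversion. That ordering, the function name, and the example rate of 0.80 are my assumptions, not how any particular bank calculates transfers.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def transfer_breakdown(send_amount, mid_market_rate,
                       fee_pct=Decimal("0.45"), fixed_fee=Decimal("1.00")):
    """Return each component of the transfer cost as its own line item.

    Assumes fees come out of the amount sent before conversion (hypothetical).
    """
    service_fee = (send_amount * fee_pct / 100).quantize(CENT, rounding=ROUND_HALF_UP)
    total_fee = service_fee + fixed_fee
    recipient_gets = ((send_amount - total_fee) * mid_market_rate).quantize(
        CENT, rounding=ROUND_HALF_UP
    )
    return {
        "service_fee": service_fee,
        "fixed_fee": fixed_fee,
        "total_fee": total_fee,
        "rate": mid_market_rate,
        "recipient_gets": recipient_gets,
    }


# Hypothetical transfer: send 1,000.00 at a mid-market rate of 0.80.
breakdown = transfer_breakdown(Decimal("1000.00"), Decimal("0.80"))
for label, value in breakdown.items():
    print(f"{label}: {value}")
```

`Decimal` is used instead of floats so the amounts shown to the user match the amounts charged, cent for cent.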

The real value of Explainable AI lies not in merely showing what the system did, but in helping people work better with it. The “Help Me Help the AI” study reminds us that users don’t just seek transparency; they seek collaboration.

When explanations guide user action and learning, they create a feedback loop where:

■ Humans gain confidence and literacy

■ AI gains richer, context-aware feedback to improve

Whether in birdwatching or banking, the future of XAI is collaborative: systems that don’t just explain themselves but learn with us to make decisions more transparent, trustworthy, and human.